Coding from the outside

Nico commented on The Bug, saying that he tends to put more discipline into his leisure-time coding than he might put into his work. Although my original post might give you the idea that my own leisure-time coding is an undisciplined mess, this is not entirely the case. I have one rule that helps keep my code reusable, well designed, on target and generally clean: Code from the outside.

If I am writing audio code, I'll write as much of it as possible without connecting a soundcard. Likewise, I won't link against bit-blitting libraries until most of my graphics code is done. This is somewhat similar to starting with test cases, but not as strict. It means writing your code, inspecting its raw output by hand and debugging it without getting caught up in the audible or visible artifacts.
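A minimal sketch of what this can look like for audio, assuming Python and a hypothetical `render_sine` function: the synthesis code is a plain function that returns sample values, and the only "output device" is a text dump. Nothing here touches a soundcard.

```python
import math


def render_sine(freq_hz, sample_rate, num_samples, amplitude=1.0):
    """Return num_samples of a sine wave as plain floats; no audio device involved."""
    return [amplitude * math.sin(2.0 * math.pi * freq_hz * n / sample_rate)
            for n in range(num_samples)]


def dump(samples, limit=8):
    """Print sample index and value as text so the waveform can be checked by eye."""
    for n, s in enumerate(samples[:limit]):
        print(f"{n:4d} {s:+.4f}")


if __name__ == "__main__":
    # A 1 kHz tone at an 8 kHz sample rate completes one cycle every 8 samples,
    # so the dump should peak at index 2, dip at index 6 and repeat every 8 lines.
    dump(render_sine(1000, 8000, 16), limit=16)
```

Once the numbers look right, a playback or file-writing stage (for example, Python's standard `wave` module) can be attached from the outside without changing the synthesis code.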

I originally learnt this while doing a rotation at a remote hospital back in Melbourne. I had borrowed my father's laptop but never got its obscure soundcard working with my Linux install, so when I decided to write a synth, I could only check the output once I returned home a few months later. Despite the fairly taxing nature of working in the ER of a small country hospital, I was able to get a few hours of coding done every few days. It was a good escape from tractor-related injuries and whatnot. Aside from my computer, I had a copy of an audio synthesis text, which was later stolen by a sound engineer when I left medicine to work in post-production, but that's a story for another day.

Long story short, I was able to code up the system as I had intended, and I wasn't distracted by the output along the way. In the past, whenever I wrote music in software, I'd get caught up in the sounds and spend hours tweaking my code, iterating between code and output. Somewhere in that loop I would forget my original intent and end up focused on aesthetics. While this is mildly pleasing, it is not as pleasurable as sticking to your original question and answering it.

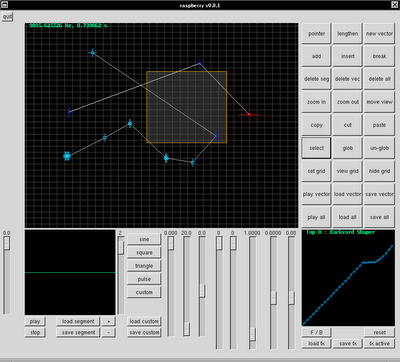

At the time, my original question was: "What would sounds generated by drawing a vector spectrogram sound like?" In the end, the answer was something along the lines of "crap", but at least I learnt that. If I had been hooked up to a soundcard, I would have been so concerned with making something that didn't sound crap that I would never have answered my original question.
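The post doesn't say how the synth turned a drawing into sound. As a sketch under one assumed reading, each stroke of a vector spectrogram can be treated as a straight segment in the time-frequency plane, rendered as a sine whose frequency glides linearly from `f0` to `f1` between times `t0` and `t1`, with all strokes summed. The names `add_segment` and `render_drawing` and the stroke tuple layout are hypothetical, and, in keeping with coding from the outside, the result is a list of numbers summarised as text rather than sent to a soundcard.

```python
import math


def add_segment(mix, f0, f1, t0, t1, sample_rate, amplitude=0.2):
    """Mix one stroke into `mix`: a sine gliding linearly from f0 to f1 Hz over [t0, t1) seconds."""
    start = max(0, int(t0 * sample_rate))
    end = min(len(mix), int(t1 * sample_rate))
    length = end - start
    phase = 0.0
    for k in range(length):
        # Instantaneous frequency, interpolated along the stroke.
        freq = f0 + (f1 - f0) * k / length
        mix[start + k] += amplitude * math.sin(phase)
        # Accumulate phase (rather than computing sin(2*pi*f*t)) so the glide stays continuous.
        phase += 2.0 * math.pi * freq / sample_rate


def render_drawing(segments, sample_rate, duration):
    """Render a list of (f0, f1, t0, t1) strokes into a buffer of float samples."""
    mix = [0.0] * int(duration * sample_rate)
    for f0, f1, t0, t1 in segments:
        add_segment(mix, f0, f1, t0, t1, sample_rate)
    return mix


if __name__ == "__main__":
    sr = 8000
    # Hypothetical drawing: a rising stroke, then a flat one that overlaps it.
    strokes = [(220.0, 880.0, 0.0, 0.5), (660.0, 660.0, 0.25, 1.0)]
    mix = render_drawing(strokes, sample_rate=sr, duration=1.0)
    # Inspect from the outside: peak level per 100 ms block, printed as text.
    block = sr // 10
    for i in range(0, len(mix), block):
        peak = max(abs(x) for x in mix[i:i + block])
        print(f"{i / sr:4.1f}s  peak {peak:.3f}")
```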

I also find that when coding directly to output, I fall into the trap of spotting bugs in the output and guessing at the underlying cause rather than properly diagnosing the problem. It is much easier to find bugs in text output than in audio or visual output. I like to think that I am smart enough to look at a complex rendering snafu and say "Duh. Off-by-one error." The truth is that I am not that smart, and I end up randomly adding +/- 1 to an iterator somewhere to see if that fixes the problem. I'm simplifying the situation somewhat, but I think all coders behave similarly. It's much harder to lie to yourself when you can clearly see the underlying mechanisms in operation.
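As a hedged illustration of why text output helps, here is a hypothetical block-processing helper, `frame_bounds`, that splits a buffer of samples into frames, next to a deliberately buggy variant with a classic off-by-one. Heard as audio, the skipped samples would probably surface only as a vague glitch; printed as numbers, the gap is plain to see. All names here are made up for the example.

```python
def frame_bounds(total, frame_size):
    """Split sample indices [0, total) into consecutive half-open frames [start, end)."""
    if frame_size <= 0:
        raise ValueError("frame_size must be positive")
    bounds = []
    start = 0
    while start < total:
        end = min(start + frame_size, total)
        bounds.append((start, end))
        start = end  # next frame begins exactly where this one ended
    return bounds


def frame_bounds_buggy(total, frame_size):
    """Same as above, with a deliberate off-by-one that skips one sample per frame."""
    bounds = []
    start = 0
    while start < total:
        end = min(start + frame_size, total)
        bounds.append((start, end))
        start = end + 1  # bug: treats `end` as inclusive, so sample `end` is never processed
    return bounds


def check_tiling(bounds, total):
    """Describe, as plain text, any gaps or overlaps between frames."""
    problems = []
    expected = 0
    for start, end in bounds:
        if start != expected:
            problems.append(f"frame starts at {start}, expected {expected}")
        expected = end
    if expected != total:
        problems.append(f"last frame ends at {expected}, expected {total}")
    return problems


if __name__ == "__main__":
    for name, fn in (("correct", frame_bounds), ("buggy", frame_bounds_buggy)):
        bounds = fn(10, 4)
        print(name, bounds, check_tiling(bounds, 10) or "ok")
```

For 10 samples in frames of 4, the buggy variant reports that its second frame starts at 5 instead of 4, which points straight at the `end + 1` rather than inviting another round of random +/- 1 edits.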

When opaque debugging coincides with a certain aesthetic, I find myself adding one to an iterator, being surprised by the output and saying "Wow. That's kinda cool. What if I add 2?!" Two hours later, after clocking my iterators to sin((i % 17) * 0.01), my rendering looks amazing, but nothing like what I originally intended. That might be OK if I'm working on an animation for its own sake, but this is rarely the case. Better to separate the aesthetic phase from the analytic phase.

Or so I say anyway.

In unrelated news, Dan is blogging. Watch that space.
